Imagine more realistic, higher-quality 3D models through applied research. Olivier Leclerc, a researcher-programmer at CDRIN, with the help of Pierre Lalancette, a former teacher of 3D animation and image synthesis at Cégep de Matane, has developed an innovative method: Neural Network-Enhanced 3D Reconstruction. This approach uses deep learning to refine photogrammetry, particularly for underwater 3D. Discover how this new process, integrated into the Meshroom software, can transform your digital projects and immersive experiences, including the production of research and scientific outreach tools.

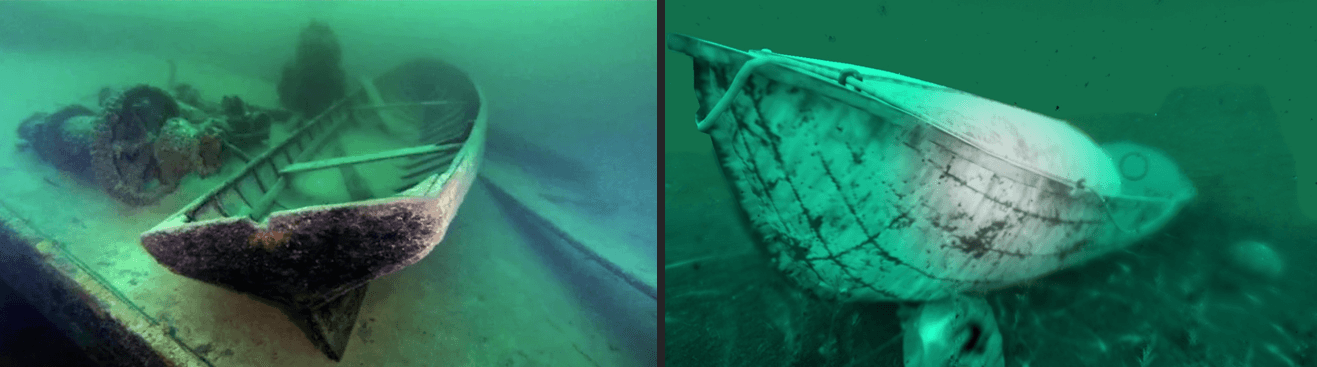

In 3D reconstruction, accurately estimating the distance to objects in a scene (known as depth map estimation) is essential for creating precise models, particularly in complex settings such as underwater photogrammetry for archaeology. We observed this while building the virtualization prototype of the Scotsman shipwreck in 2021-2022, when CDRIN acted as a technology partner in an immersive museum experience project initiated by the IRHMAS.

Whether for scientific outreach, as in underwater archaeology, or in fields such as engineering, entertainment, or healthcare, precise 3D models have become essential to support research and science communication.

What Is Photogrammetry?

Photogrammetry builds a 3D shape from photographs taken from different viewpoints. It shortens the production time of 3D elements by cutting time-consuming, tedious tasks, and it multiplies the possibilities for photorealistic reproduction in fields such as entertainment, land development, engineering, manufacturing, archaeology, and healthcare.

However, despite significant progress in depth estimation, current techniques still reach their limits when generating smooth, continuous surfaces, especially when images are acquired in challenging conditions or are degraded.

Deep Learning for Enhanced 3D

Our research, entitled “Improved 3D Reconstruction using Neural Networks,” combines innovative depth estimation techniques with deep learning, significantly enhancing 3D reconstruction for various photogrammetry applications. We have developed a novel method for depth estimation from multiple images of the same scene, a problem known as “stereo vision” when two images are used and “multi-view stereo” (MVS) when there are more.
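To make the two-image case concrete: in a rectified stereo pair, the depth of a point follows from how far it shifts between the two images (its disparity). The short sketch below shows this textbook triangulation only. It is not the CDRIN method, and the function name, focal length, and baseline are illustrative assumptions.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Triangulate depth (metres) for one pixel of a rectified stereo pair.

    disparity_px: horizontal shift of the pixel between the two images.
    focal_px:     focal length expressed in pixels (assumed value).
    baseline_m:   distance between the two camera centres (assumed value).
    """
    if disparity_px <= 0:
        # No measurable shift: the point is treated as infinitely far away.
        return float("inf")
    return focal_px * baseline_m / disparity_px


# Hypothetical rig: 1000 px focal length, 10 cm baseline.
for d in (50.0, 10.0, 2.0):
    print(d, depth_from_disparity(d, focal_px=1000.0, baseline_m=0.1))
```

Because depth is inversely proportional to disparity, a small disparity error on a distant point produces a large depth error, which is one reason degraded images are so hard to reconstruct.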

The developed system increases the accuracy and robustness of the 3D reconstruction process and can be used with either a generalist model or the one developed by CDRIN in collaboration with IRHMAS. In parallel with the development of the process, we created a synthetic dataset and trained a new neural network, all adapted to the underwater environment.

Entering a new era of 3D reconstruction

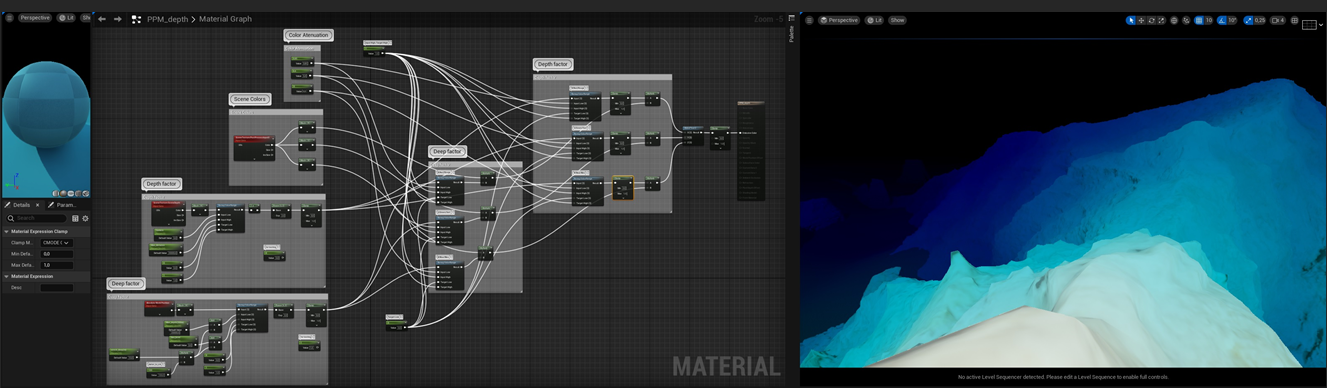

To achieve this, we began by analyzing existing methods. We first evaluated the algorithm currently in use (SGM) and identified its limitations. After thorough research, we selected two promising AI techniques: HitNet and IGEV. This work required a careful analysis of Meshroom’s source code and the setup of a compilation procedure. The main objective was to understand how Meshroom’s ‘DepthMap’ node works so that we could replicate it effectively in our system.
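For readers unfamiliar with SGM (semi-global matching), the sketch below illustrates its core idea on a single image row and a single aggregation path: per-pixel matching costs are smoothed by penalizing disparity changes between neighboring pixels. This is a simplified textbook illustration, not Meshroom's DepthMap implementation. The absolute-difference cost, the penalties `p1` and `p2`, and all function names are assumptions made for the example.

```python
def matching_cost(left, right, max_disp):
    """Absolute-difference cost C[x][d] between left[x] and right[x - d]."""
    big = 255  # cost used when the candidate falls outside the right row
    return [
        [abs(left[x] - right[x - d]) if x - d >= 0 else big
         for d in range(max_disp + 1)]
        for x in range(len(left))
    ]


def aggregate_left_to_right(cost, p1=5, p2=30):
    """One SGM path: small disparity steps cost p1, larger jumps cost p2."""
    n_disp = len(cost[0])
    agg = [list(cost[0])]
    for x in range(1, len(cost)):
        prev = agg[-1]
        prev_min = min(prev)
        row = []
        for d in range(n_disp):
            candidates = [prev[d], prev_min + p2]
            if d > 0:
                candidates.append(prev[d - 1] + p1)
            if d < n_disp - 1:
                candidates.append(prev[d + 1] + p1)
            # Subtracting prev_min keeps the accumulated values bounded.
            row.append(cost[x][d] + min(candidates) - prev_min)
        agg.append(row)
    return agg


def winner_takes_all(agg):
    """Pick, for each pixel, the disparity with the lowest aggregated cost."""
    return [min(range(len(row)), key=row.__getitem__) for row in agg]


# Toy scanline: the right row is the left row shifted by 2 pixels.
left = [10, 10, 80, 200, 90, 10, 10, 10]
right = left[2:] + [10, 10]
cost = matching_cost(left, right, max_disp=3)
disparities = winner_takes_all(aggregate_left_to_right(cost))
print(disparities)
```

On this toy row, the flat pixels to the right of the textured area have ambiguous raw costs, and the smoothness penalties carry the disparity found on the textured pixels over to them. Hand-tuned penalties of this kind are the part that learned methods aim to replace.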

HitNet requires the input images to be transformed so that corresponding pixels are aligned, a step we implemented successfully with OpenCV. However, difficulties arose when integrating its outputs with Meshroom. By contrast, the open-source IGEV technique was easy to adapt and offered a standalone MVS solution.

The chosen inference engine, LibTorch, imposes some trade-offs in flexibility but works with multiple versions of the exported model. Implementing the new module involved converting and structuring the data into matrices and tensors, so that its outputs can be used for 3D reconstruction.

AI-Powered Synthetic Data Generation for Underwater Archaeology

We then moved on to the synthetic data generation phase, which supplies the images used to train an AI model. We used the Unreal Engine 5.1 3D engine and its associated plugins to obtain the necessary render passes.

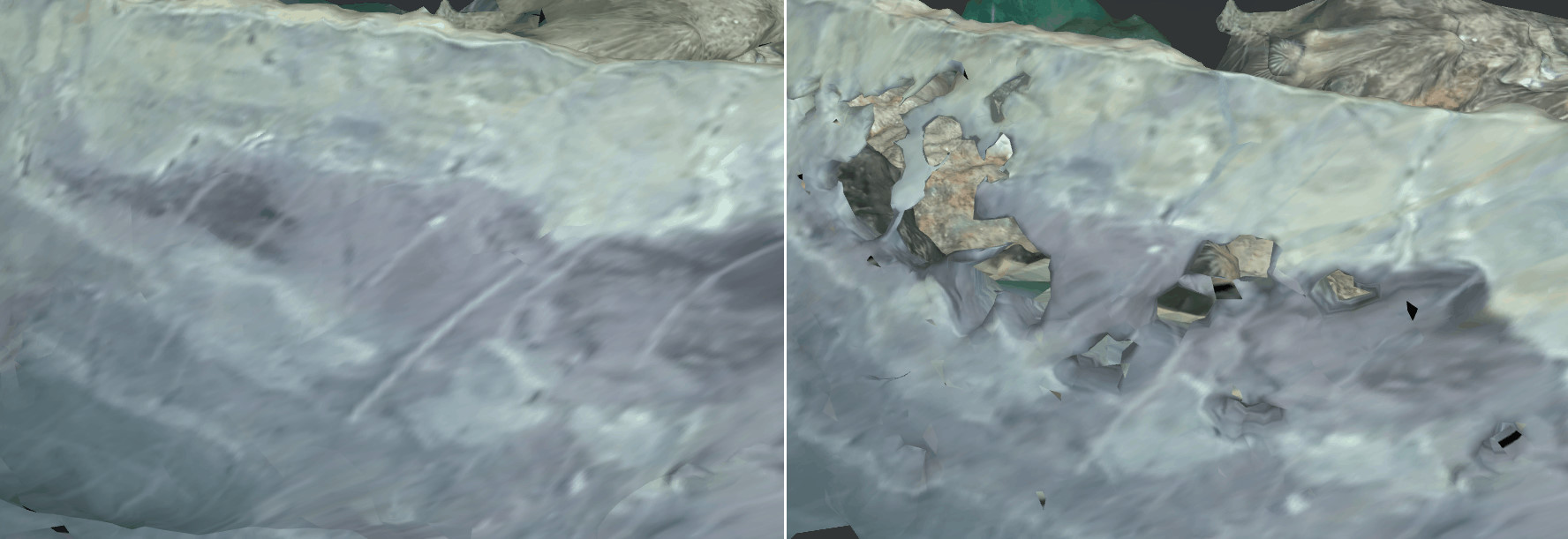

We created an underwater scene using Megascans textures and modeled a boat. We also developed a dedicated material to simulate underwater vision, following a complex methodology to reproduce the way water absorbs light.
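The article does not detail how the material models light absorption. A common starting point for this kind of effect is the Beer-Lambert law, in which each color channel fades exponentially with the distance traveled through water. The sketch below illustrates that law only. The coefficients are hypothetical values chosen to show red fading fastest, and they are not those of the CDRIN material.

```python
import math

# Hypothetical per-channel attenuation coefficients (1/m): red fades fastest
# in water. Illustrative values only, not those of the CDRIN material.
ATTENUATION = {"r": 0.40, "g": 0.07, "b": 0.03}


def attenuate(rgb, distance_m, coeffs=ATTENUATION):
    """Apply I = I0 * exp(-c * d) to each channel of an (r, g, b) colour."""
    return tuple(
        value * math.exp(-coeffs[channel] * distance_m)
        for value, channel in zip(rgb, "rgb")
    )


white = (1.0, 1.0, 1.0)
for distance in (0.0, 5.0, 20.0):
    r, g, b = attenuate(white, distance)
    print(f"{distance:5.1f} m -> r={r:.3f} g={g:.3f} b={b:.3f}")
```

With coefficients ordered this way, a white object drifts toward blue-green as distance grows, which is the color cast a reconstruction network has to cope with in underwater footage.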

We first generated basic sequences for preliminary tests, then worked meticulously on depth of field and other effects to simulate underwater image capture. In total, more than 14,000 images were produced, covering a variety of seabed conditions and problem cases. Student Aly Henri contributed by modeling the “Scotsman” boat.

Retraining the AI Model

To enhance the AI model’s performance in challenging environments, particularly underwater settings with their distortions and turbidity, we undertook a retraining process. This required converting the images generated by the synthetic data module into a format compatible with the IGEV method.
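The article does not specify the target format. Public stereo training sets such as Scene Flow store disparity maps as PFM files, so the sketch below assumes a similar target: it converts a rendered depth pass to disparity and serializes it as PFM. The camera parameters, function names, and the choice of PFM itself are assumptions made for illustration, not a description of the actual conversion module.

```python
import struct


def depth_to_disparity(depth_rows, focal_px, baseline_m):
    """Convert a depth map (metres) to disparity (pixels): d = f * B / Z."""
    return [
        # Non-positive depth marks an invalid pixel; store disparity 0 there.
        [focal_px * baseline_m / z if z > 0 else 0.0 for z in row]
        for row in depth_rows
    ]


def pfm_bytes(rows):
    """Serialise a single-channel float image as PFM (rows stored bottom-up)."""
    height, width = len(rows), len(rows[0])
    # A negative scale in the header marks little-endian float data.
    header = f"Pf\n{width} {height}\n-1.0\n".encode("ascii")
    body = b"".join(
        struct.pack(f"<{width}f", *row) for row in reversed(rows)
    )
    return header + body


# Tiny 2x2 depth pass from a hypothetical virtual stereo rig.
depth = [[2.0, 4.0], [0.0, 10.0]]
disparity = depth_to_disparity(depth, focal_px=1000.0, baseline_m=0.1)
data = pfm_bytes(disparity)
print(len(data), "bytes")
```

In practice the bytes would be written to disk next to the matching left and right color images, one file per rendered frame.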

A Module to Integrate into Meshroom Software

After several stages of transformation and development of specific modules, we successfully implemented an advanced depth estimation technique in the Meshroom software. Moreover, we trained a version adapted to the underwater environment using our own synthetic data. Our next step will be to propose our solution to the Meshroom development team for potential integration into the official software.

Simplified Access to 3D Reconstruction

The equipment required for high-quality scans is expensive, and the capture and reconstruction process is laborious. Built on open-source software such as Meshroom, our solution allows faster, higher-quality digitization of real-world elements and gives small businesses and cultural organizations better access to 3D reconstruction.

For example, the material produced for simulating seabeds has been used in a 3D presentation project of the city of Matane, contributing to an ongoing community project.

This technological advancement can find applications in fields such as land development, engineering, manufacturing, archaeology, and museology.

Skills Transfer in Education

The CDRIN research project enables modeling students at Cégep de Matane to create more robust models from fewer, lower-quality photos (most students use their smartphones to capture their photo sets). They can now arrive at functional models more quickly. Additionally, the project’s methodology has been presented to VFX students to guide their approach to problem solving, with an emphasis on setting goals, planning the steps to be taken, overcoming obstacles, and the ethics of collaborative research.