Editorial

CVPR, the essential conference for computer vision, once again offered a fascinating glimpse into the technological advancements that will shape the future of many fields, including digital creativity. Two of our researchers, Olivier Leclerc and Shaghayegh (Sherry) Taheri, had the opportunity to attend and share their impressions on emerging technologies, their discoveries, and the potential impact of these innovations on the digital creativity sectors. Delve into their thoughts on diffusion models, synthetic data generation, 3D reconstruction, creative AI, and much more. The researchers also share their recommendations for businesses in the field.

What motivated you to attend CVPR 2024, and what specific goals did you have in mind for this event?

Olivier Leclerc: I attended last year and found it very inspiring, even though I missed the first part (workshops). This year, I wanted to have the full experience and get a sense of the entire field, to help me understand where the vision community is focusing its attention. I find it provides me with an overview and inspiration that lasts all year long as I explore new ideas to incorporate into my work.

Shaghayegh (Sherry) Taheri: I was excited to attend CVPR because it’s a top conference in computer vision where I could learn about the latest advancements in the field and connect with leading experts (it may sound cliché, but it’s true). My main goal was to gain practical insights that could be applied to our current projects.

What were the key highlights of CVPR 2024 for you, and why do you consider them significant for the digital creativity industry?

Olivier: I have to say, the breadth of applications for diffusion models surprised me. People are exploring every way they can be used, and it goes far beyond image generation. For example, they can recreate unseen parts during 3D reconstruction to compensate for suboptimal pictures, making the photogrammetry process more forgiving.

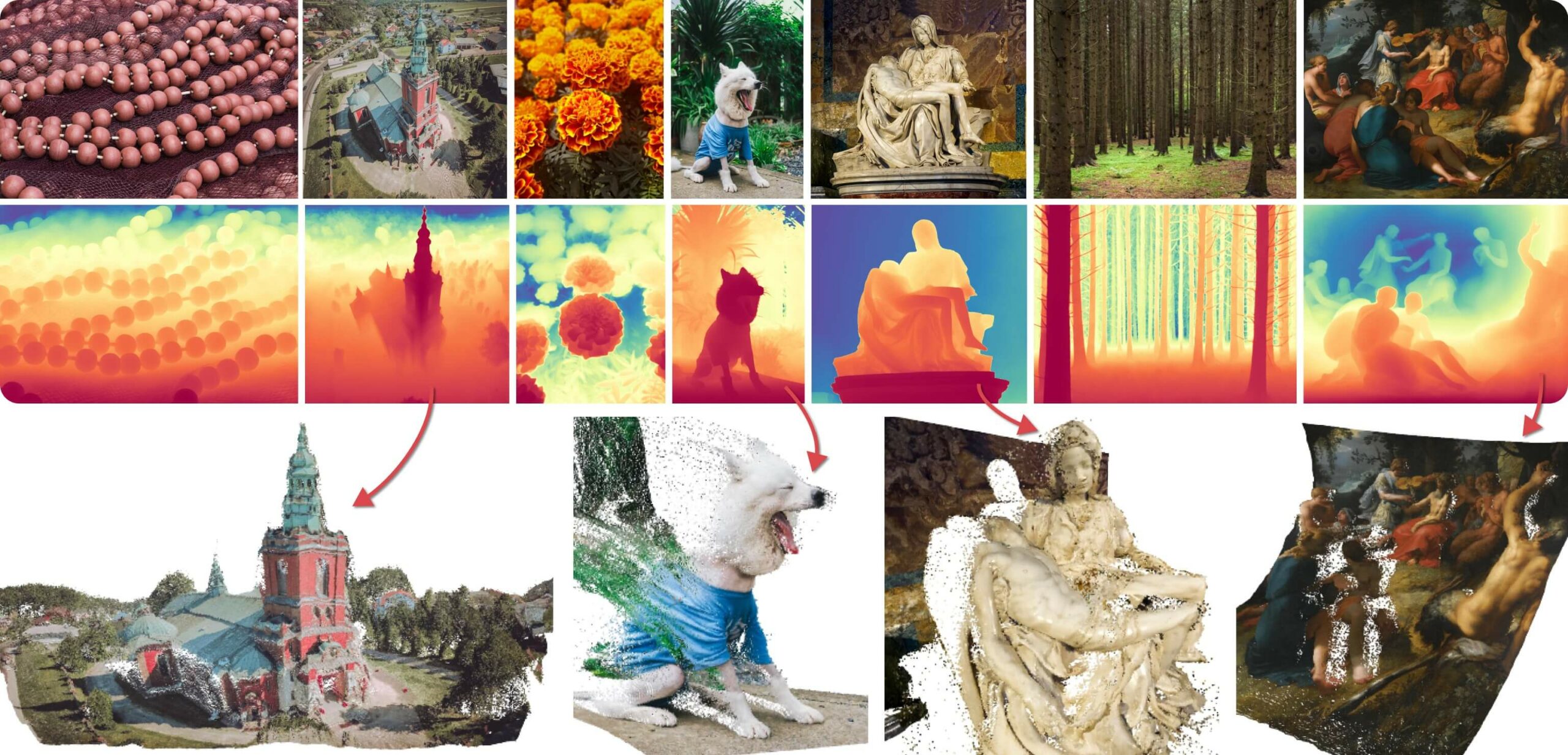

Sherry: I was impressed by the diverse research and advancements in diffusion models, vision-language models, synthetic data generation, 3D generation, and generative animation. I found it particularly interesting to see the innovative repurposing of diffusion models. For instance, the paper Repurposing Diffusion-Based Image Generators for Monocular Depth Estimation demonstrates how the extensive knowledge embedded in Stable Diffusion can be utilized for monocular depth estimation. Another example is the DiffusionPoser method, which showcases the reconstruction of human motion in real time from arbitrary combinations of IMU sensors by employing an inpainting denoising algorithm.

Were there any unexpected insights or trends that you discovered during CVPR 2024 that you think will influence our industry?

Olivier: Something I learned there was the amount of effort going into body interpretation, likely related to the VR and metaverse trend. I was surprised that models can predict leg movements without seeing them or having any sensors on them, simply by correlating head and hand movements with real-world full-body human behaviors. There’s also great progress on markerless motion capture.

Sherry: One of the most intriguing talks I attended was “N=0: Learning Vision with Zero Visual Data.” It explained how LLMs, trained on non-visual datasets, can make semantic judgments about images without ever seeing them. The idea was explored further in the paper The Platonic Representation Hypothesis. I think the ability of LLMs to understand images without direct visual training is fascinating and holds great potential for future applications.

Which emerging technologies or innovations showcased at CVPR 2024 do you believe will have the most impact on our field (video games, VFX, animation, and immersion)?

Olivier: There were incremental improvements on virtually all subjects. I remember one paper named SpiderMatch, where they’re able to do very good shape matching between humanoids and animals. I also saw a demonstration of a camera that does “in-pixel computing”, which enables AR so fast that there’s no perceptible delay even in very fast movements.

Sherry: I see a lot of potential in the 3D generation research. Although it’s not yet production-ready, it’s improving quickly. Once it reaches the desired quality, it will likely have a significant impact in these fields.

How has attending CVPR 2024 impacted your role, and what insights have you gained that you plan to integrate into our ongoing and upcoming projects at CDRIN?

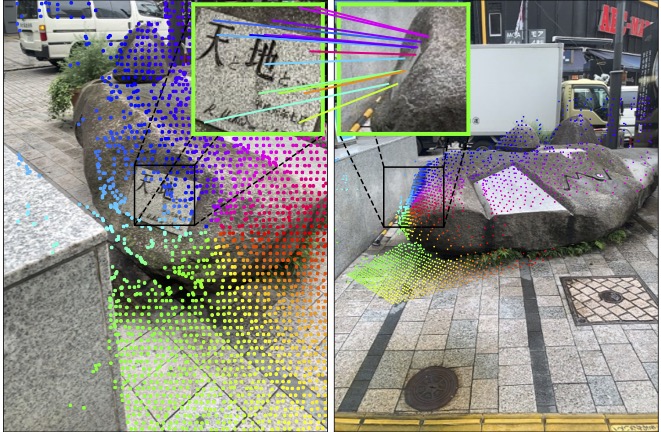

Olivier: I looked for the best innovations in camera pose estimation (a prerequisite step for NeRFs and Gaussian Splatting). I saw the DUSt3R/MASt3R paper and talked to the authors, and went to the Image Matching Challenge Workshop. There are excellent contributions in this field that I’m eager to put to use!

Sherry: Apart from the great insights into deep learning architectures, image and video understanding, GenAI, multimodal deep learning, and vision-language models, a key takeaway was the critical role of data. Often, data is even more crucial than the AI models themselves. The advancements in synthetic data generation and dataset distillation presented at CVPR are particularly important, and I plan to incorporate these into my work at CDRIN.

How does the innovation landscape in digital creativity compare to what you witnessed at CVPR 2024?

Olivier: I think there are a lot of usable ideas flying in all directions, and I see companies providing more and more good implementations within their software or cloud offerings. But by doing this, they capture the value and risk obscuring future progress. We need to stay up to date and make sure we understand what’s happening in order to freely develop or modify those technologies to suit our needs.

Sherry: Digital creativity and the advancements from conferences like CVPR are really intertwined now. Companies making digital tools are also publishing research papers, showing how important academic research is in pushing this field forward. For our future strategies, this means we should keep building on the latest cutting-edge research.

How do you envision the impact of the emerging technologies showcased at CVPR on the broader digital intelligence landscape, and what steps do you think we should take to stay ahead in this rapidly evolving field?

Olivier: Large foundation models are too expensive to train for us to compete. However, there’s a lot we can do, provided we take care of structuring and strategically sharing our data. I think the key is in customization and small models (edge computing), as well as smart usage of larger models.

Sherry: I believe we should continue actively integrating new technologies into our development processes while optimizing our resource utilization and organizing our data more efficiently. I am particularly interested in exploring techniques such as data and model distillation. Additionally, collaborating with other research centers and universities can help us stay ahead.

Our recommendations for digital creativity businesses, regarding areas to explore based on our insights from CVPR 2024:

AI tools are revolutionizing digital creativity across many aspects. Use 2D generative models like Stable Diffusion for high-fidelity content creation. Stay updated on the fast-growing field of 3D generation, including methods like Gaussian splatting.

If your company faces data scarcity, explore synthetic data generation. This field is advancing rapidly, with generative models being used to create new datasets. Examples from CVPR include using human motion generation to improve autonomous vehicle performance in detecting pedestrian behavior, and the SyntaGen workshop competition on creating high-quality datasets for semantic segmentation.

Check out Gaussian splatting (the successor to NeRFs): splats are getting easier to generate from fewer images (as few as 2 in pixelSplat). There are also unsupervised and data augmentation techniques that can perform well with scarce data for object detection and segmentation. Lastly, if you work with human bodies, there’s a lot to see in terms of motion capture, pose detection, shape matching, movement prediction, and more.